Artificial intelligence is getting so advanced it can now generate its own hype (yes, an AI chatbot wrote that joke).

But seriously, now that even the most analog among us is familiar with the words “OpenAI” and “ChatGPT,” we figured it was time to dig into the nuts and bolts of this technology. AI makes lots of headlines, but there are still plenty of questions about how to use it to build better products.

We here at Common Room are big believers in the transformative power of AI (it’s one of our data science pillars!). More specifically, how we can use it to help our customers. That’s why we’ve been accelerating our product with OpenAI’s large language models (LLM) for well over a year.

We’ve learned a lot in that time. Whether you’re looking to incorporate AI into your own product development—or just want a peek behind the Common Room curtain—these are the top five lessons we’ve learned building product with OpenAI.

You can’t spell ‘explainer’ without AI

Before we jump into our learnings, it’s helpful to understand how we use AI at Common Room.

Our product is designed to help organizations bring together vast amounts of data, make sense of it, and take action on it—fast. Basically, our job is to help companies quickly spot the signal from the noise and take the right next steps, no matter where a customer is in their journey.

Large language models (LLMs) are a big part of that. The ability to detect sentiment, group content into relevant topics, and categorize conversations—it’s all dependent on LLMs. And that’s where OpenAI comes in.

Long before GPT-4, we were using OpenAI’s LLM models to beef up our NLP capabilities. We started with davinci, the most advanced GPT-3 model at that time. And as OpenAI’s models evolved, so did ours. It’s what’s allowed our small-yet-mighty team to release multiple NLP features so quickly.

So, without further ado, here are our top five takeaways:

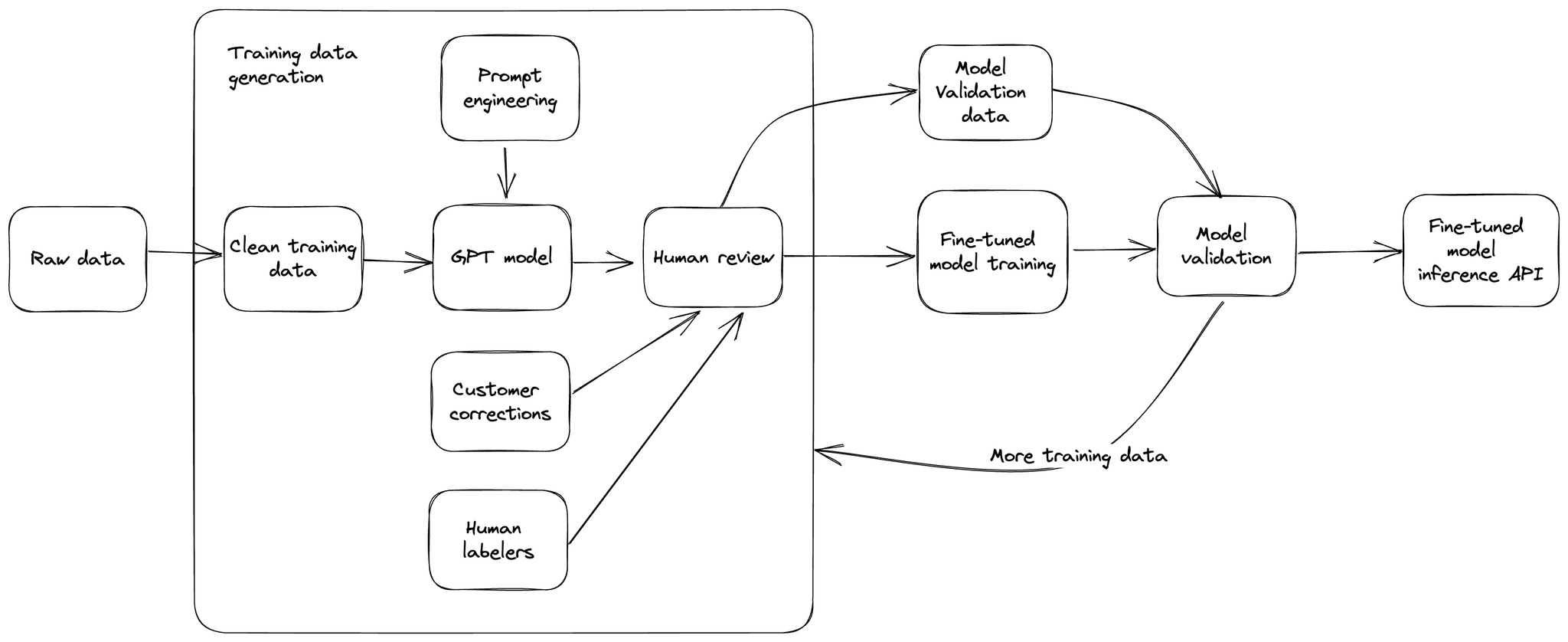

1. Fine-tuned models may be your best bet

ChatGPT has been pre-trained on a massive amount of text from the open internet. When given a prompt with just a few examples, it can often intuit what task you’re trying to perform and generate a plausible completion. This is called "few-shot learning" or “zero-shot learning.”

And while you can use pre-trained foundational models directly in production, the cost can add up. Even if we were using a model that cost $0.002 per 1K tokens, if you consider that each sentence may eat up 30 tokens—and a conversation in a community may contain 3-4 sentences—you get a sense of how quickly costs can rise across a large customer base that includes well-known communities such as Snowflake, Atlassian, Figma, and even OpenAI itself.

Our solution was to train models on our own dataset via fine-tuning. Running a fine-tuned model was less than one-eighth the price!

But fine-tuned models are about more than cost control. Even as AI technology gets more sophisticated, zero-shot learning can produce incorrect results. This is where humans in the loop become critical. Annotating the results we wanted our model to learn and then fine-tuning the model based on that made for more accurate (not to mention more affordable) performance.

Here's an example of why fine-tuning is helpful for trickier cases:

Common Room: Determine the sentiment of this message and explain what contributes to it: “I hate how good this presentation was!”

AI model: The sentiment of this message is negative. This is because the word “hate” is used, which is a strong negative emotion. Additionally, the phrase “how good” implies that the speaker is surprised by the quality of the presentation, which further contributes to the negative sentiment.

2. The cleaner the data, the better the results

The importance of clean data goes without saying, but it was especially important for our work.

Given our focus on community content, not all data is nicely structured—or particularly helpful for determining sentiment. Plus, some platform-specific components (such as hashtags) may confuse the model. We found that removing these from the data we sent to our models gave us a boost in accuracy.

We chose to skip processing short messages of a few words since they carry little weight and don’t contain sufficient context to explain sentiment or topics. For long messages, we chose to shorten them if they were too long. Unlike reviews, which can have a useful summary at the end that carries a lot of weight and significance, the takeaways in conversational content are usually found early on in the message.

3. Prompting is part art, part science

The accuracy of model responses can vary widely based on your prompting. That makes prompt engineering crucial.

With that in mind, here are a few tips and tricks we picked up along the way:

- Give as much context as possible.

- Provide examples of what you’re looking for.

- Ask the model not to overfit by adding “do not answer if unsure.”

- Keep the model temperature 0 to avoid randomness and to get better chances of reproducibility of results.

- If you know the expected length of your output, specify it in maximum tokens.

- Ask the model to ask follow-up questions and give examples.

- Give the model your preferred output format so you can easily analyze the response.

- Group multiple questions together into one prompt.

Single question:

Common Room: Determine the sentiment for this message: “I am new with async functions and I'm trying to make multiple calls from an external API. Futures is not quite enough to retrieve the responses so I tried with asyncio and HTTPX, but the process is throwing an error unknown and difficult to debug for me. It seems that the coroutine is having an empty value or is never being called.”

AI model: Neutral.

Grouped question:

Common Room: Determine the sentiment for this message and explain what contributes to it: “I am new with async functions and I'm trying to make multiple calls from an external API. Futures is not quite enough to retrieve the responses so I tried with asyncio and HTTPX, but the process is throwing an error unknown and difficult to debug for me. It seems that the coroutine is having an empty value or is never being called.”

AI model: The sentiment of this message is frustration. The author is new to async functions and is having difficulty with the process, which is throwing an error that is unknown and difficult to debug. This contributes to the sentiment of frustration.”

4. Testing is personal

OpenAI provides metrics to measure the accuracy of fine-tuned models, but ultimately you’re going to know what works best for you and your product needs.

For example, our models are released across a wide range of different customers, which is why they’re generalizable. Since we’re more interested in having a higher precision than recall for most of our use cases, we measured and tuned our models for our metrics. Also, we decided on our metrics before we worked on the analysis so we wouldn’t run into problems from cherry picking metrics.

We’ve also learned it’s important to roll out models slowly so that early users have time to provide feedback. We allow customers to update categories and sentiment themselves, then we use that feedback for further model refinement.

5. APIs simplify everything

Running inference on our OpenAI models—the process of feeding them data and getting results back—via an API call is a key feature for us. This enables us to maintain zero infrastructure for model deployment. To further cut costs related to API calls, we cache all the responses to reduce duplicate calls.

We’re only scratching the surface of what AI technology can do—and how we can use it to make Common Room more valuable for our customers. The learnings we shared above are mainly related to labeling and multitask classification (in terms of machine learning problems).

Now we're embarking on generative AI work using GPT-4. We'll keep you updated on the tuning and validation techniques that worked best for us. There’s plenty more to learn, but hopefully the takeaways above will help you on your own product journey.

Good luck, happy prompting, and pleasant fine-tuning!

Put AI to work with Common Room

Ready to see how Common Room uses AI to help you drive awareness, adoption, and revenue? Get started for free today or book a demo.